If you've been exploring the rapidly expanding world of Artificial Intelligence, you've undoubtedly encountered Large Language Models (LLMs) like ChatGPT, Claude, Gemini, and others. The initial experience can be mind-blowing, right? The way these models generate human-like text, answer questions, and even help with creative tasks is undeniably impressive. Many users are diving in, using these tools for everything from drafting emails to generating content ideas.

But as you spend more time with these base models – meaning, using them straight out of the box without any specialized customization or additional training – you might start to notice something. While the outputs are often fluent and coherent, they can also feel a bit... generic. A bit predictable. This isn't just a feeling; it's a reflection of how these powerful tools are built and the data they learn from. Today, I want to delve into why LLMs, in their standard form, act as the "Great Mean Reverters," and what it takes to push them beyond these common-denominator outputs.

Disclaimer: This piece is deeply technical, so give it a read if you want to nerd out and pick up some fringe knowledge. It's also long, so if you prefer audio, check out the podcast version.

Peeking Under the Hood: What Makes LLMs Tick (and Lean Towards the Average)

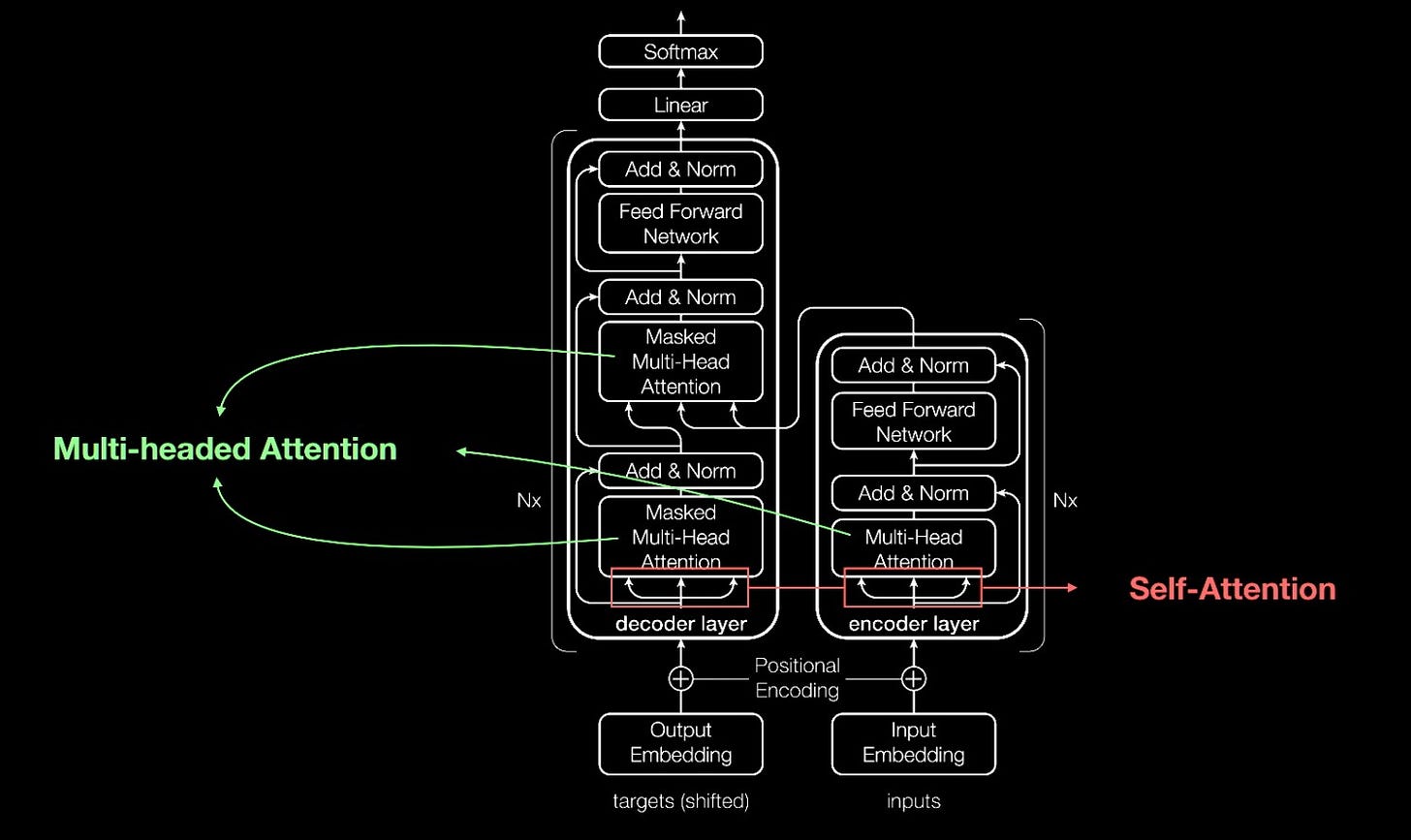

To understand this "mean reversion," it helps to have a conceptual grasp of how LLMs operate. We don't need to become AI researchers overnight, but understanding the fundamentals is key to using them effectively. The majority of today's leading LLMs are built on an architecture called the Transformer. A truly revolutionary concept within the Transformer architecture is the "Attention Mechanism." Think about how you process information when reading a complex document. You don't assign equal importance to every single word. Instead, your brain naturally homes in on the critical sentences, keywords, and phrases that carry the core message. The Attention Mechanism allows the AI model to perform a computationally similar feat, dynamically weighing the importance of different segments of the input text. This helps it understand context and the intricate relationships between words, even if those words are separated by many others in the text. In Transformers this takes the form of "Self-Attention," in which every token in a sequence is scored against every other token in that same sequence, giving the model a much more sophisticated grasp of grammar, nuance, and meaning than was possible with earlier AI architectures. This enhanced ability to manage long-range dependencies and process information holistically is a primary reason why Transformer models have become the dominant force in language AI.
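To make the weighing idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the three token vectors are made up for illustration, and each vector acts as its own query, key, and value, whereas real Transformers first pass the vectors through learned weight matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Assumption for illustration: every vector is used directly as its
    own query, key, and value.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the same sequence.
        scores = [dot(query, key) / scale for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(len(query))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for three tokens.
tokens = ["bank", "river", "money"]
vectors = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how much one token "attends" to every token in the sequence. With these toy vectors, "river" puts less weight on "money" than on itself, because their vectors have nothing in common.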

Attention to Too Many Details

Recent research from Anthropic looks deeper inside these networks through the lens of mechanistic interpretability. Their findings suggest that neural networks group words, concepts, or data into clusters of similar vectors, effectively assigning specific meanings or roles to these groups. Individual neurons often respond to several unrelated concepts at once, but Anthropic's work on Monosemanticity suggests that a network's activations can be decomposed into features that each represent a single, distinct concept.

Chris Olah, an Anthropic co-founder who leads its interpretability research, explains these complex ideas in detail (he discussed this around the 4-hour 50-minute mark in a Lex Fridman podcast interview). The core implication of this emergent behavior in pre-trained neural networks like LLMs is that their training deeply encodes specific meanings derived from the training data directly into the model's structure. This encoding is not superficial; it becomes part of the model's fundamental way of processing information. Therefore, unless this established encoding is deliberately modified through additional, targeted training aimed at introducing new meanings or reshaping the existing Monosemantic structures, the model will tend to default to results based on its original, deeply ingrained understanding, reinforcing its pull towards the mean of its training data.

GPTs, Backpropagation and Gradient Descent

Now, consider the "GPT" in a name like ChatGPT. It stands for "Generative Pre-trained Transformer." The "Generative" part tells us its main job is to create new text, not just to classify or analyze existing text. The "Pre-trained" component is crucial to our discussion today. This means that before an LLM is made available to the public or fine-tuned for specific applications, it undergoes an extensive initial learning phase. During this phase, it analyzes colossal datasets, typically scraped from the vast expanse of the internet – encompassing websites, digital books, articles, forums, and a plethora of other text-based content.

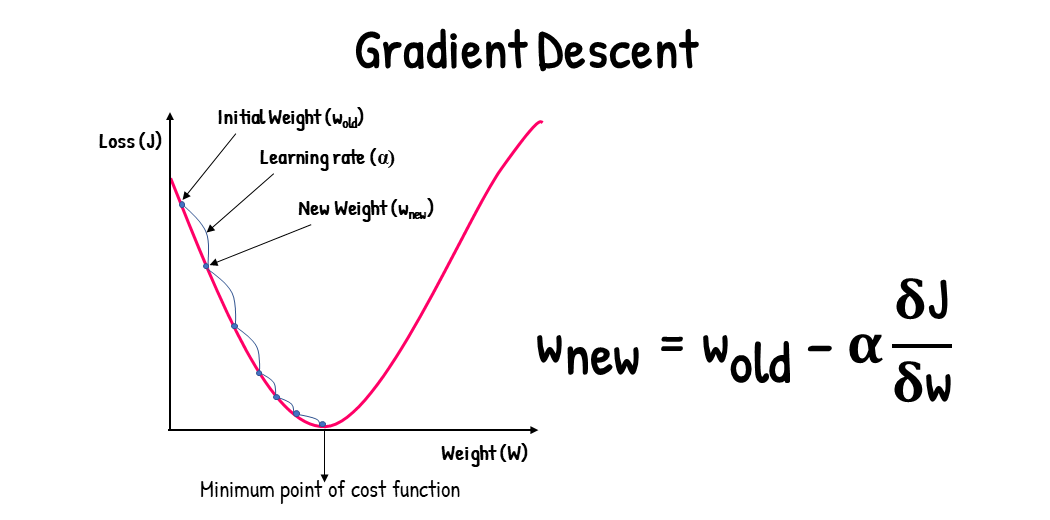

So, how does this "learning" actually occur? It's a sophisticated dance of algorithms, often described using terms like backpropagation and gradient descent. An analogy might help here: imagine a novice archer trying to hit a bullseye. Their first arrow (the model's initial attempt to predict or generate text) might be off the mark. The archer observes how far off the arrow landed and in which direction (the model calculates an "error" or "loss" by comparing its output to the desired output in its training data). Then, the archer adjusts their stance, aim, and release (the model tweaks its internal parameters, which can number in the billions) to try and reduce that error on the next shot. This iterative process of predicting, calculating error, and adjusting parameters, with the error information "propagating back" through the model's network and the parameters shifting in the "direction" (gradient) that promises the steepest reduction in error (descent), is how the model gradually refines its ability to generate coherent and contextually relevant text.
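The archer's loop can be sketched in a few lines. This is a toy under stated assumptions: a single parameter `w`, a made-up dataset where the right answer is `w = 3`, and a derivative written out by hand. In a real network, backpropagation is the procedure that computes this same kind of derivative for billions of parameters at once.

```python
def loss(w, data):
    """Mean squared error of the prediction w * x against the target y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def gradient(w, data):
    """Derivative of the loss with respect to w (worked out by hand)."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def train(data, w=0.0, learning_rate=0.05, steps=200):
    """Repeat: measure the error, then nudge w against the gradient."""
    history = []
    for _ in range(steps):
        history.append(loss(w, data))
        w -= learning_rate * gradient(w, data)
    return w, history

# Hypothetical training data generated by the rule y = 3 * x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
w, history = train(data)
print(f"learned w = {w:.4f}")
print(f"loss went from {history[0]:.2f} to {history[-1]:.6f}")
```

The learning rate plays the role of how boldly the archer adjusts between shots: too small and progress is slow, too large and the arrow overshoots the target on the other side.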

However, at its most fundamental operational level, what an LLM is doing when it generates text is performing Next Token Prediction. It examines the sequence of words (or "tokens," which can be parts of words) it has processed so far and then calculates the probabilities of all possible next tokens. It then typically selects the token deemed most statistically likely (or samples from a distribution of likely tokens), appends it to the existing sequence, and then repeats the entire process to generate the subsequent token, and so on, effectively building out sentences and paragraphs one piece at a time. This is an incredibly advanced form of statistical pattern matching, deeply rooted in the patterns observed in its training data. It’s vital to remember this: it's predicting likelihoods based on learned patterns, not achieving "understanding" in the human sense.
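A tiny word-level model shows the same loop in miniature. This sketch assumes a made-up four-sentence corpus and predicts the next token from only the single previous token by counting, which is far cruder than a Transformer, but the generate-append-repeat cycle is the same.

```python
import random
from collections import Counter, defaultdict

# Hypothetical training corpus; "mat" is common, "sofa" is rare.
corpus = (
    "the cat sat on a mat . "
    "the cat sat on a sofa . "
    "the cat sat on a mat . "
    "the dog slept on a mat ."
).split()

# Count how often each token follows each other token.
follow_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    follow_counts[current][following] += 1

def next_token_probs(token):
    """Probability of each possible next token, given the previous one."""
    counts = follow_counts[token]
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items()}

def generate(start, length, greedy=True, seed=0):
    """Build a sequence one token at a time."""
    rng = random.Random(seed)
    sequence = [start]
    for _ in range(length):
        probs = next_token_probs(sequence[-1])
        if not probs:
            break  # no known continuation
        if greedy:
            token = max(probs, key=probs.get)  # most likely token
        else:
            token = rng.choices(list(probs), weights=list(probs.values()))[0]
        sequence.append(token)
    return sequence

print(next_token_probs("a"))
print(" ".join(generate("the", 6)))
print(" ".join(generate("the", 6, greedy=False, seed=7)))
```

After the token "a", the model gives "mat" a probability of 0.75 and "sofa" 0.25, so greedy generation always ends up on the mat. The sofa only ever appears when sampling, and even then only about a quarter of the time.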

One of the clearest explanations of gradient descent and backpropagation, and of their role in training Transformer models, comes from OpenAI co-founder Ilya Sutskever (who has discussed these concepts, for example, on the Lex Fridman Podcast). The critical implication of these pre-training techniques is that next token prediction inherently follows the "path of least resistance." This path is dictated by what the pre-training process has modeled as the most probable sequence, based on the deeply aligned vector representations that words or groups of words have formed. This ties directly back to the earlier point about emergent Monosemanticity: the model defaults to the statistically common connections it has learned.

The Bell Curve Conundrum: Why Base LLMs Gravitate to the "Average"

This brings us directly to the heart of why standard LLMs tend to revert to the mean: their training data. As mentioned, the pre-training phase relies on an immense volume of text largely sourced from the public internet. When an AI model ingests this data, it converts words, phrases, and concepts into numerical representations, often referred to as vectors or embeddings. Through this complex mapping, terms and ideas that share similar meanings or are frequently used in similar contexts within the training data end up being grouped closely together in a vast, high-dimensional mathematical space. The AI, in essence, learns the statistical interrelationships between these linguistic elements, rather than their abstract, intrinsic meanings.
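"Grouped closely together" has a simple numerical meaning, which the sketch below illustrates with cosine similarity. The three-dimensional vectors here are hand-written assumptions for illustration only; real embeddings are learned during training and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Similarity of direction between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for this example.
embeddings = {
    "doctor": [0.90, 0.80, 0.10],
    "nurse": [0.85, 0.75, 0.20],
    "guitar": [0.10, 0.20, 0.95],
}

def nearest(word):
    """Return the other word whose vector points in the most similar direction."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))

for other in ("nurse", "guitar"):
    score = cosine_similarity(embeddings["doctor"], embeddings[other])
    print(f"doctor vs {other}: {score:.3f}")
print("nearest to doctor:", nearest("doctor"))
```

Words that appear in similar contexts end up with vectors pointing in similar directions, so "doctor" lands next to "nurse" and far from "guitar" without the model knowing what any of them is.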

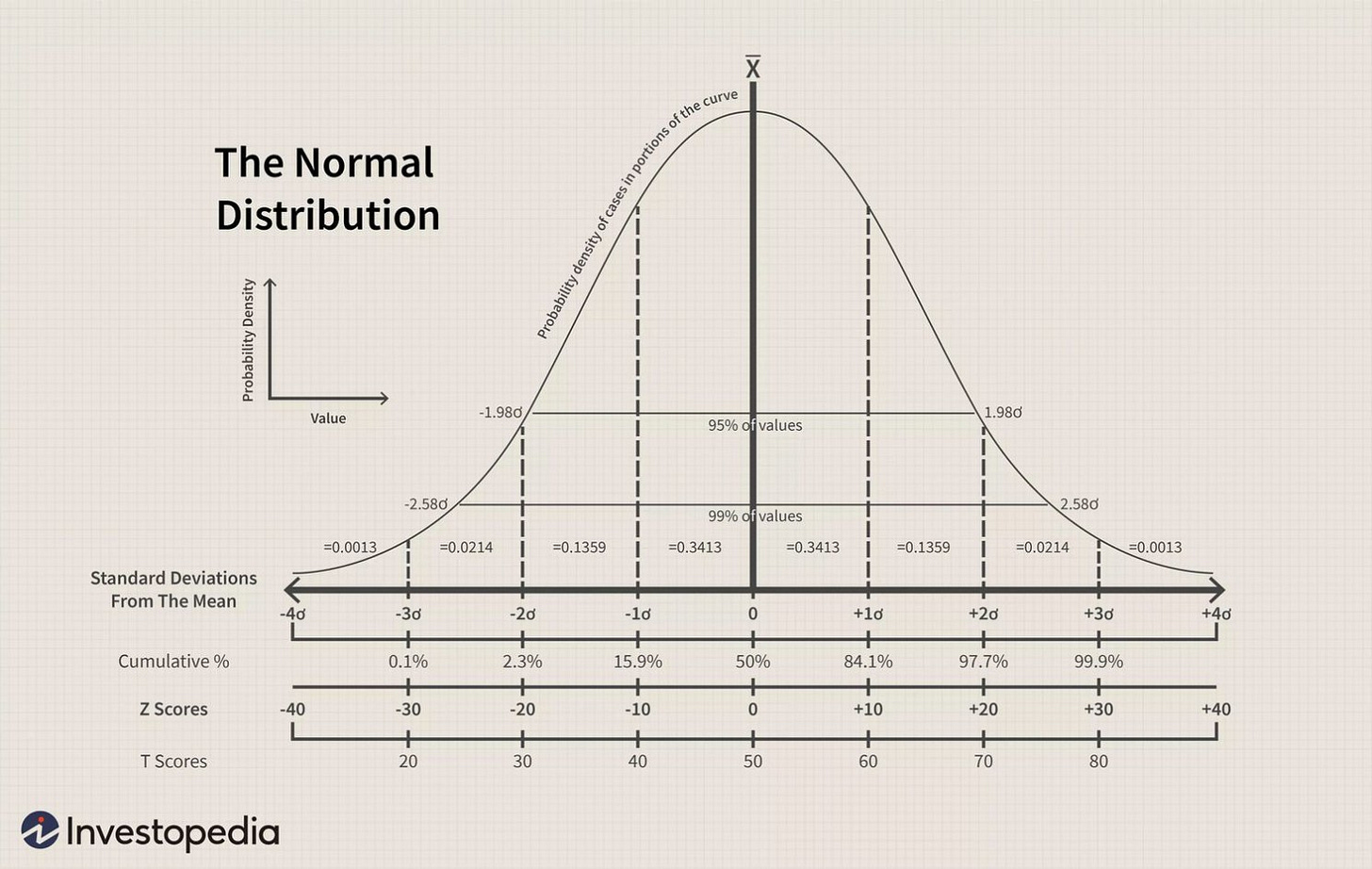

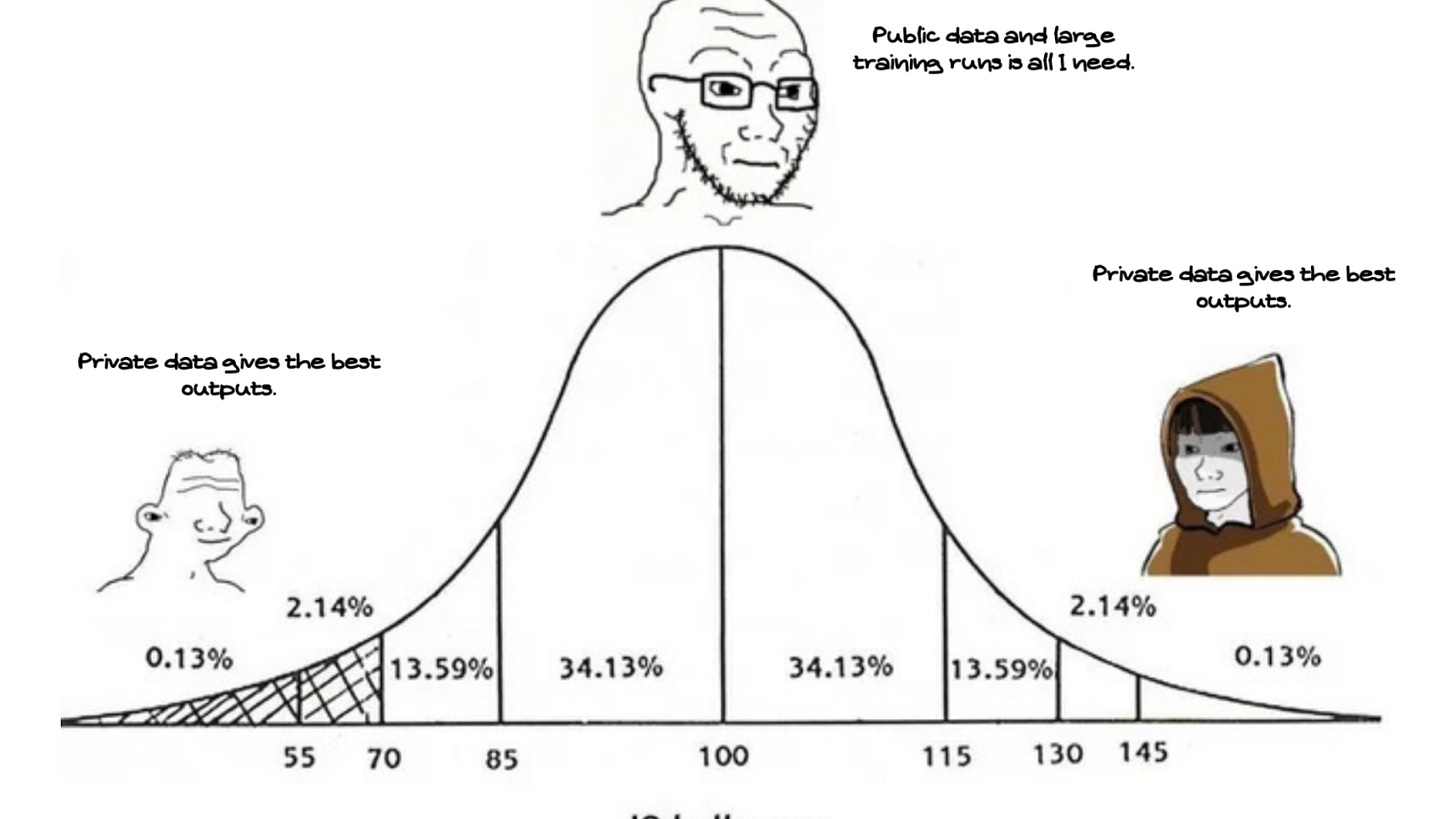

Now, try to visualize the distribution of all that publicly available online text. What does the overwhelming majority of this content represent? It reflects common language patterns, prevalent opinions, widely accepted facts, standard advice, and typical writing styles. If you were to chart the characteristics of this vast dataset, the result would look much like a bell curve, also known as a normal distribution. The prominent peak of this bell, its tallest point, sits at the average, the mean. This is where the highest concentration of data points resides. Consequently, this middle ground is where the LLM encounters the most examples during its training, and thus it's the area where the model develops its most robust and confident predictive capabilities.

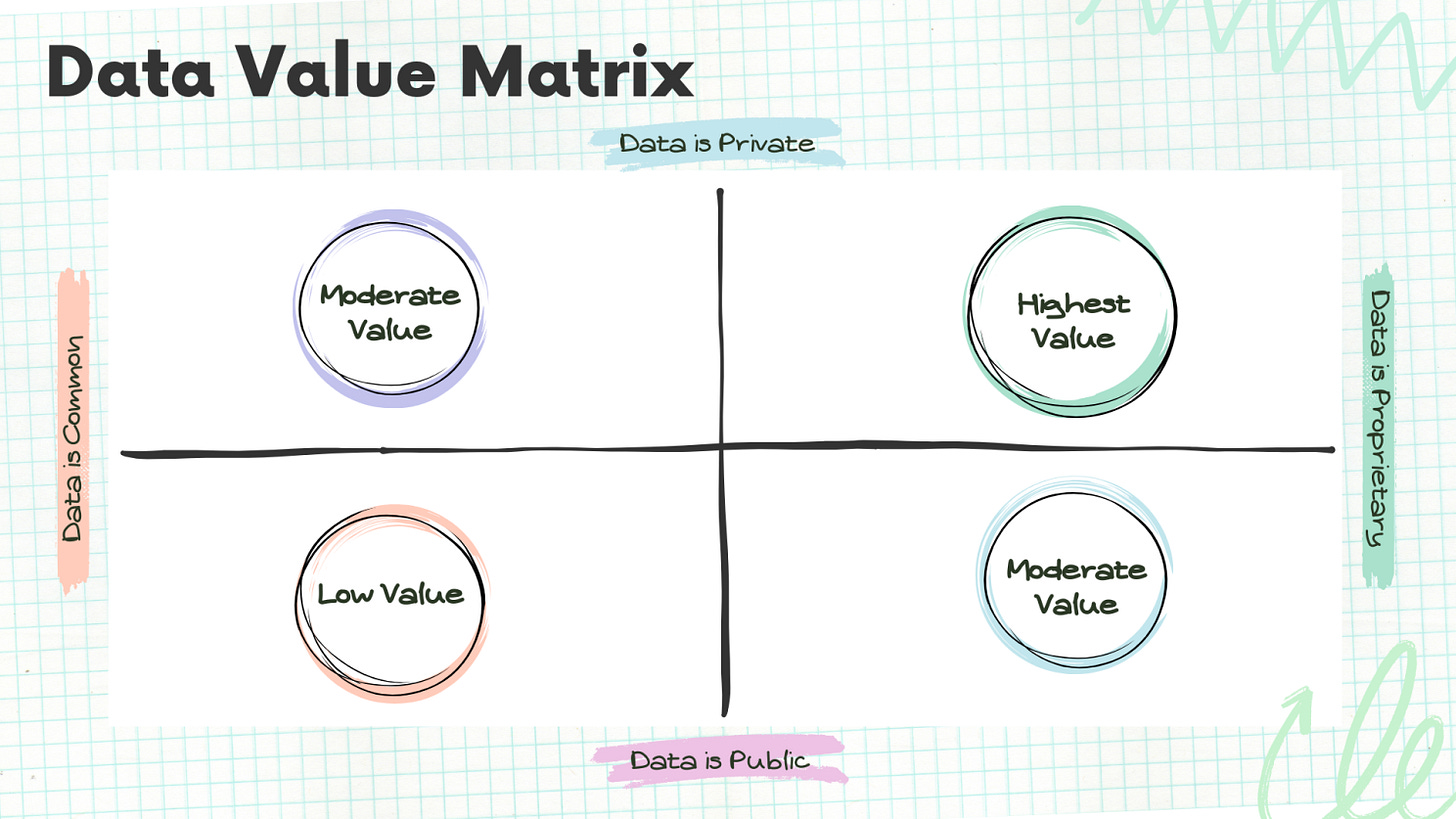

But what about information, styles, or ideas that are uncommon, novel, or highly specialized? Think about groundbreaking scientific theories before they are widely accepted, unique artistic expressions, or niche expertise. This type of content is inherently scarce on the internet compared to the vast ocean of more conventional information. Such data points would lie on the thin tails of that bell curve, perhaps representing the top few percentiles of originality, specificity, or unpopularity. Because this "exceptional" or "uncommon" data is so much rarer in the training corpus, the LLM has significantly less exposure to it. Furthermore, vast amounts of private, proprietary, or highly specialized data are complete blind spots for LLMs trained on public data – for example, the internal knowledge base of a specific company or the contents of a niche, gated community.
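To put rough numbers on how thin those tails are, here is a small calculation that takes the bell-curve picture literally. It assumes an idealized normal distribution, which real internet text only loosely resembles, and uses Python's built-in `statistics.NormalDist`.

```python
from statistics import NormalDist

# An idealized bell curve: mean 0, standard deviation 1.
standard = NormalDist(mu=0.0, sigma=1.0)

def share_within(k):
    """Fraction of data lying within k standard deviations of the mean."""
    return standard.cdf(k) - standard.cdf(-k)

for k in (1, 2, 3):
    inside = share_within(k)
    print(f"within {k} sd: {inside:.4f}   in the tails: {1 - inside:.4f}")
```

Under this idealization, roughly two thirds of the data sits within one standard deviation of the mean, and well under 1% lies more than three standard deviations out. That lopsidedness is what the model sees during training.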

The statistical consequence of this training reality is profound and direct: because LLMs are predominantly trained on "average" or commonly available data, their outputs are statistically biased towards reflecting that average. They are far more likely to generate text that aligns with the characteristics found in the dense, middle part of the bell curve than to produce outputs that embody the unique, rare, or specialized qualities found at the tails. It’s crucial to understand that this isn't a "bug" or a simple flaw that can be easily patched. It's an inherent outcome of the statistical methods underpinning their training and the natural distribution of the publicly accessible data they learn from.
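A toy simulation makes the bias visible. The sketch below assumes a hypothetical corpus of 100 article openings, 95 conventional and 5 unusual, and a "model" that simply learns those frequencies. Both example sentences and the 95/5 split are invented for illustration.

```python
import random
from collections import Counter

# Hypothetical training data: 95 conventional openings, 5 unusual ones.
common = "In today's fast-paced world"
rare = "Rust never sleeps in the shipyard"
training_openings = [common] * 95 + [rare] * 5

# "Training" here just means learning how often each opening occurs.
counts = Counter(training_openings)
total = sum(counts.values())
learned_probs = {text: n / total for text, n in counts.items()}

# Greedy decoding: always pick the most probable option.
greedy_choice = max(learned_probs, key=learned_probs.get)

# Sampling: draw 1,000 outputs in proportion to the learned frequencies.
rng = random.Random(42)
samples = rng.choices(list(learned_probs),
                      weights=list(learned_probs.values()), k=1000)
sample_share = Counter(samples)[greedy_choice] / len(samples)

# Chance that five independent choices in a row all land in the tail.
five_rare_in_a_row = learned_probs[rare] ** 5

print("greedy output:", greedy_choice)
print(f"share of sampled outputs that are the common opening: {sample_share:.3f}")
print(f"chance of five tail choices in a row: {five_rare_in_a_row:.2e}")
```

Greedy decoding never produces the unusual opening at all, and sampling produces it only about as often as it appeared in training. Because long outputs are built from many such choices, the odds of staying in the tail the whole way through shrink very quickly.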

Breaking the Mold: Moving Beyond Mean Reversion

Understanding this tendency towards mean reversion is essential for anyone using LLMs and seeking outputs that are truly distinctive, innovative, or deeply tailored to a specific, uncommon need. If you're interacting with a base LLM without any form of customization, you are, in essence, tapping into the "wisdom of the crowd" as reflected in its training data. For many general tasks, this is perfectly adequate and incredibly useful. But when you need something that stands apart from the common, you'll likely find the default outputs somewhat uninspired or too generic for your purpose.

If the goal is to elicit uncommon, specialized, or highly original outputs from an LLM, simply using the base model with basic prompts often won't cut it. The underlying technology, the pre-training on generally available data, and the resultant deep encoding of "average" meanings will persistently pull its outputs back towards the mean.

To achieve those more exceptional results, several advanced techniques and approaches become necessary. These aren't always simple plug-and-play solutions but represent deeper levels of engagement with the AI:

Reinforcement Learning from Human Feedback (RLHF): This is a crucial technique used to fine-tune models beyond their initial pre-training. Human reviewers evaluate and rank the model's outputs, and those judgments are typically used to train a reward model that steers further training toward the kinds of responses people prefer. This helps align the model more closely with desired behaviors and styles, pushing it away from unhelpful or generic tendencies.

Advanced Prompt Engineering: While basic prompting gets you started, crafting highly specific, context-rich, and cleverly structured prompts can guide the LLM towards more nuanced and targeted outputs. This is an art and science in itself, involving iterative refinement and a deep understanding of how the model "thinks."

Specialized Training / Fine-tuning on Custom Datasets: For truly bespoke performance, models can be further trained (fine-tuned) on specific datasets relevant to a particular domain, style, or knowledge base. If you want an LLM to write in a very specific technical style or understand the jargon of a niche industry, feeding it high-quality examples of that specific type of text is key. This provides it with the "uncommon" data it didn't see (or saw little of) in its general pre-training.

Evaluations (Evals): Rigorous testing and evaluation frameworks are needed to measure how well a model is performing on specific tasks and whether its outputs are aligning with the desired level of quality, specificity, or uniqueness. Without robust evals, it's hard to know if your efforts to move beyond the mean are actually succeeding.

Alignment Documentation and Processes: For organizations or individuals seeking consistent, high-quality, and specialized outputs, developing clear alignment documents that define desired tones, styles, factual accuracy requirements, and ethical boundaries is crucial. These documents guide the prompting, fine-tuning, and RLHF processes to ensure the model's outputs consistently meet the specific, uncommon criteria.
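As one small example of what an eval for "uniqueness" might look like, the sketch below computes a distinct-n score: the share of n-grams across a batch of outputs that are unique. This is only one crude proxy, and the sample outputs are invented for illustration; a real eval suite would combine several measures with human review.

```python
def distinct_n(texts, n=2):
    """Share of n-grams across all texts that are unique (0.0 to 1.0).

    Lower scores mean the outputs keep repeating the same phrases.
    """
    ngrams = []
    for text in texts:
        words = text.lower().split()
        ngrams.extend(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    if not ngrams:
        return 0.0
    return len(set(ngrams)) / len(ngrams)

# Hypothetical model outputs for the same request.
generic_outputs = [
    "unlock the power of ai today",
    "unlock the power of data today",
    "unlock the power of teams today",
]
varied_outputs = [
    "audit one workflow before buying tools",
    "your backlog is a map of old promises",
    "ship the smallest test that could fail",
]

print(f"generic batch: {distinct_n(generic_outputs):.2f}")
print(f"varied batch:  {distinct_n(varied_outputs):.2f}")
```

The generic batch scores 0.60 because the same word pairs keep recurring, while the varied batch scores 1.00. Tracking a number like this before and after a prompt or fine-tuning change gives you at least a rough signal of whether you are actually moving away from the mean.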

Final Thoughts on Mean Outcome Tendencies of LLMs

Large Language Models are, without a doubt, transformative technologies. Their ability to process and generate language is opening up new frontiers in countless fields. However, it's vital to approach them with a clear understanding of their inherent characteristics. Left to their own devices, base LLMs trained on broad public data will naturally tend to produce outputs that reflect the average, the common, the mean of that data.

This isn't necessarily a drawback if your needs are general and an average outcome is good enough for the job at hand. But if you're looking for novelty, deep specialization, or outputs that truly break the mold, you'll need to go beyond simple interactions with the base model. Techniques like RLHF, sophisticated prompt engineering, specialized training on custom data, rigorous evaluations, and clear alignment strategies are the keys to unlocking an LLM's potential for generating genuinely uncommon and exceptionally valuable results. The power is there, but coaxing out the extraordinary requires a more deliberate and informed approach.

The good news is that moving beyond these base-level, mean-reverting outputs is possible. It requires a strategic approach, but the tools and methods exist. In a future article, I'll dive deeper into practical tips and actionable strategies you can employ to start coaxing those top-quartile, exceptional outputs from LLMs, helping you tailor their power to your unique and specific needs. Stay tuned for that!

What are your experiences with LLMs? Have you noticed this tendency towards "mean reversion"? What techniques have you found useful for eliciting more unique or specialized outputs? I'd love to hear your thoughts in the comments!